Advanced Micro Devices, Inc. (NASDAQ: AMD) is mounting a significant challenge to NVIDIA’s (NASDAQ: NVDA) dominance of the multibillion-dollar artificial intelligence (AI) accelerator market. AMD’s strategy has two prongs: delivering competitive, memory-dense hardware, and building an open software ecosystem that lowers the barriers for customers who want to diversify their AI infrastructure.

AMD’s AI Strategy: The Open Hardware-Software Stack

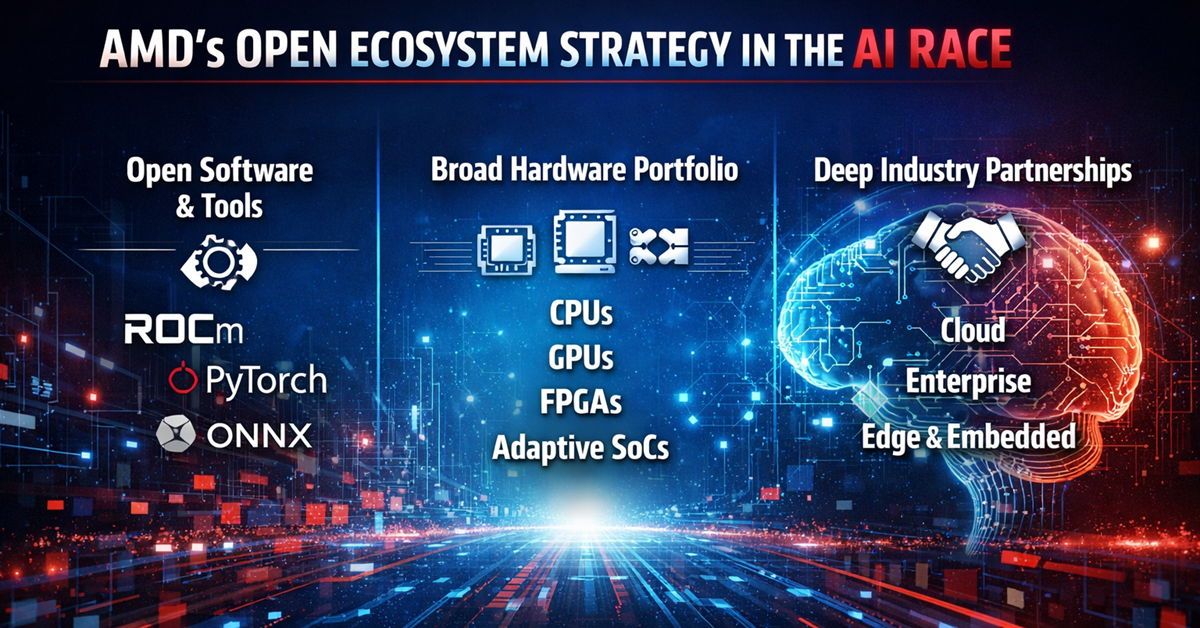

AMD’s core AI strategy is to be the credible, high-performance alternative to NVIDIA by offering a compelling combination of technology and accessibility:

- Leadership Hardware (Instinct Accelerators): The centerpiece of AMD’s hardware push is its Instinct line: the MI300 series, led by the MI300X, followed by the upcoming MI325X and MI350 series. These accelerators use a multi-chip module (chiplet) design that allows for very large memory capacity and bandwidth. The MI300X, for example, carries 192GB of HBM3 memory, more than twice the 80GB on its primary competitor, the NVIDIA H100 (SXM).

- The Open Ecosystem (ROCm): NVIDIA’s proprietary CUDA software has long been its primary moat. AMD is countering this with its ROCm (Radeon Open Compute) platform, an open-source software stack that supports major AI frameworks like PyTorch and TensorFlow. The open nature of ROCm resonates with cloud providers (hyperscalers) and large AI companies who desire flexibility, cost-effectiveness, and the ability to customize their infrastructure without being locked into a single vendor.

- Strategic Partnerships: AMD is leveraging deep collaboration with major hyperscalers and AI leaders, including Microsoft, Meta, Oracle, and OpenAI. These partnerships are critical as they provide direct feedback to refine the ROCm software stack and validate the performance of the Instinct chips in the most demanding AI environments.

Competition with NVIDIA: Where AMD Shines

NVIDIA currently holds an estimated 90%+ share of the data center GPU market, primarily because of the maturity and ubiquity of its CUDA ecosystem, which has made it the default platform for AI training.

AMD is strategically aiming to capture share by focusing on:

- AI Inference Workloads: This is where AMD truly shines. Inference (running a trained model, such as a chatbot, to serve end users) is typically done at smaller batch sizes, where performance depends heavily on memory capacity and bandwidth, and where latency is a key metric.

- Large Language Models (LLMs): The MI300X’s 192GB of memory allows very large models (roughly 70 to 150 billion parameters, depending on numeric precision) to be loaded entirely onto a single GPU. Avoiding the need to split a model across several GPUs simplifies deployment and reduces latency. Benchmarks suggest the MI300X can deliver a significant latency advantage over the H100 (up to 40% in some scenarios) and better cost efficiency in specific cases, such as large-model inference at very small batch sizes.

- Cost-Effectiveness: AMD’s chips are generally marketed as a more cost-effective option than their NVIDIA counterparts. As hyperscalers try to contain capital expenditure (capex) amid the massive AI infrastructure buildout, a high-performance, lower-cost alternative becomes highly attractive.
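
The memory-capacity argument above comes down to simple arithmetic: a model’s weights occupy roughly (parameter count) × (bytes per parameter). The Python sketch below is a rough, weights-only estimate under stated assumptions: it ignores the KV cache, activations, and runtime overhead, all of which add to real memory needs, and the `BYTES_PER_PARAM` table and helper functions are hypothetical illustrations, not any vendor’s sizing tool. It compares the MI300X’s 192GB with the 80GB of the H100 SXM.

```python
# Rough, weights-only estimate (hypothetical helpers). Ignores KV cache,
# activations and runtime overhead, which all add to real memory requirements.
BYTES_PER_PARAM = {"fp16": 2.0, "bf16": 2.0, "fp8": 1.0}

# Per-GPU memory capacities in GB.
GPU_MEMORY_GB = {"MI300X": 192, "H100 (SXM)": 80}


def weight_footprint_gb(params_billions: float, dtype: str) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes): billions of params x bytes per param."""
    return params_billions * BYTES_PER_PARAM[dtype]


def fits_on_one_gpu(params_billions: float, dtype: str, gpu_memory_gb: float) -> bool:
    """True if the weights alone fit within a single GPU's memory."""
    return weight_footprint_gb(params_billions, dtype) <= gpu_memory_gb


if __name__ == "__main__":
    for params in (70, 150):
        for dtype in ("fp16", "fp8"):
            need = weight_footprint_gb(params, dtype)
            verdicts = ", ".join(
                f"{gpu}: {'fits' if fits_on_one_gpu(params, dtype, cap) else 'does not fit'}"
                for gpu, cap in GPU_MEMORY_GB.items()
            )
            print(f"{params}B params @ {dtype}: ~{need:.0f} GB -> {verdicts}")
```

Under these assumptions, a 70-billion-parameter model in 16-bit precision (about 140GB of weights) fits on one MI300X but not on one H100, while a 150-billion-parameter model fits on a single MI300X only at 8-bit precision (about 150GB).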

The main battle is the software ecosystem. While ROCm is improving rapidly, breaking the entrenched developer loyalty to CUDA remains AMD’s biggest long-term challenge.

Stock Outlook for 2026 (NASDAQ: AMD)

The stock outlook for AMD in 2026 is overwhelmingly tied to the successful commercialization and revenue ramp of its Instinct data center GPU business. Financial projections reflect significant optimism:

- Data Center Revenue Acceleration: AMD is targeting a compound annual growth rate (CAGR) above 60% for its Data Center business over the next several years. At that rate, revenue would roughly quadruple in three years. This implies that the MI300 series and its successors are expected to generate tens of billions of dollars in annual revenue, up from an estimated $5 billion+ in 2024.

- Earnings Growth: Consensus analyst estimates suggest AMD’s earnings growth rate could nearly triple in 2026, with earnings per share (EPS) estimates around $6.44. This projected growth is driven by the high margins of the data center AI business and continued strength in AMD’s CPU and AI PC segments.

- Valuation and Risk: After a strong run in the share price, AMD’s valuation, as measured by its price-to-earnings (P/E) ratio, is high, reflecting the market’s bullish view of its AI potential. If the company executes on its data center growth targets and the ROCm ecosystem reaches critical mass, the stock could have further upside. The primary risks are delays in executing the AI roadmap and the possibility of a broader slowdown (or “bust”) in AI infrastructure spending if adoption moderates in 2026.
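
To put the >60% CAGR target in perspective, compound growth multiplies a starting value by (1 + r) each year, so revenue after n years is base × (1 + r)^n. The Python sketch below is illustrative only: it treats the roughly $5 billion 2024 Instinct estimate as a hypothetical starting point, even though AMD’s stated target applies to its broader Data Center business, and the function names are made up for this example.

```python
from typing import List


def project_revenue(base: float, cagr: float, years: int) -> List[float]:
    """Revenue for year 0..years, compounding `base` at annual rate `cagr` (hypothetical helper)."""
    return [base * (1 + cagr) ** t for t in range(years + 1)]


def implied_cagr(start: float, end: float, years: int) -> float:
    """Inverse calculation: the constant annual rate that grows `start` into `end`."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Hypothetical inputs: ~$5B base in 2024, 60% annual growth.
    BASE_YEAR = 2024
    for offset, revenue in enumerate(project_revenue(5.0, 0.60, 5)):
        print(f"{BASE_YEAR + offset}: ${revenue:.1f}B")
```

At 60% a year, revenue roughly quadruples in three years (1.6³ ≈ 4.1) and grows about 10.5-fold in five, which is how a ~$5 billion base could reach tens of billions within a few years if the target were met.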

In summary, the stock’s performance through 2026 depends on proving that the Instinct platform can capture a meaningful share (e.g., 20% to 30%) of the fast-growing cloud AI infrastructure market.